LESS IS MORE

Why Statistics is important. Why collecting only some of the data is often better than collecting all of it. And the role Probability plays in data collection.

If you have a question — about anything — you are likely to need to collect data to answer it. I mean this in the most general possible sense. Imagine yourself as Alexa or Siri: when someone asks a question, what does the software do? It looks things up in databases.

So data is at the heart of pretty much any form of inquiry. This is why data science — Statistics — is so important.

And it raises an immediate question: Do we collect all of the data, or only some of the data?

It seems obvious that the answer to this question would usually be “We should collect all of the data.”

There are reasons, however, why this is frequently either a bad idea, or is simply not even possible.

First, you may not know where all of the data is. Or, even if you do, there may be far too much of it.

How many hours, on average, do A level students spend on homework per night?

How would you go about collecting all of the data in order to answer that question? Is there a way to know who all of the A level students are? Even if you do, how would you collect data from all of them? (You know who they are, but do you know where they are?)

A related issue is time and expense: even if you can access all of the data, do you have the resources to do so? It is probably possible to construct a list of every A level student studying in Harrogate. (Such a list is called a sampling frame.) But there will be hundreds of them. Do I have time to interview them all? Is it practical to distribute questionnaires to them all? And then collect them all back? And that’s only the beginning: I then have to collate the data — enter it all into a spreadsheet, perhaps — and then analyse it.

If I’m the government or a big corporation, maybe I do have the resources to do all of that. But, before I sign off on the budget, I should ask myself, “How important is this research?" Do I really need absolutely all of the data?

In principle, if I do have all of the data then I can answer my question exactly and with certainty. But if I don’t have all of the data then there will be uncertainty associated with my conclusions. This is where probability theory comes in: probability is about understanding and dealing with uncertainty. And — done right — it’s very good at it. The outcome of any given roll of the dice in Las Vegas is uncertain, but the casinos make huge profits despite this. As do banks and insurance companies, all of whom have to deal with a lot of uncertainty.

If I do collect all of the data my answer to the homework question might be something like, “On average A level students in Harrogate spend 83.2 minutes per night on homework.” Whereas, if I only collect some of the data, my answer might be be more like “They spend on average between 75 and 90 minutes per night on homework.” That second answer is less precise, but it might nonetheless be good enough. After all, I should always be thinking “Why am I asking this question in the first place? What am I going to do with the answer?”

Now, I said the second answer is “less precise”. Does that mean the first answer is exactly right? In fact it may well not be. Imagine there are 1,537 A level students in Harrogate. I am surely not going to collect data from them all personally. I’ll have assistants or employees or volunteers. I’ll maybe rely on schools to distribute questionnaires. The schools will give the questionnaires to form tutors to hand out. Jonny is not in school today, so Jenny is going to give him his questionnaire the next time she sees him. If she remembers. Then somehow all the questionnaires have to get back to me.

How many people in this chain actually care about my data collection? I know I do. But do any of the others? Even the people I pay to help out will be less invested in the precision of the answer than I am. Besides, I’m only paying them minimum wage. They’ll miss things out, they’ll make things up. And the guy I’m paying to type all the data into a spreadsheet doesn’t really care, either. He’ll make mistakes. I told him to double-check it all, but he probably won’t. How would I know that he had?

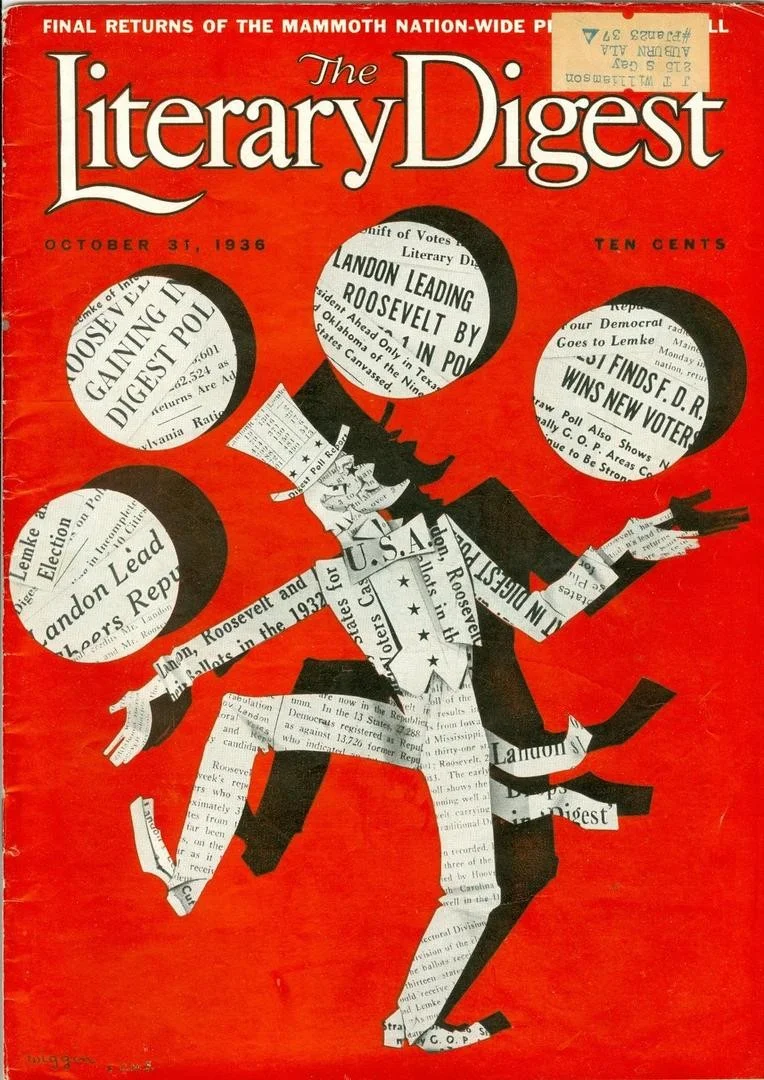

The key issue with data collection is not data quantity: it is data quality. One of the most notorious examples of getting this horribly wrong is the Literary Digest poll of 1936.

If you click on the words Literary Digest in the previous paragraph it will take you to Wikipedia’s description of the polling fiasco that led to the demise of that publication. But, in brief, a much respected magazine surveyed ten million Americans in 1936 in order to predict the outcome of that year’s presidential election. It had done this in the previous five such elections and its prediction had been correct each time. Meanwhile, an unknown pollster, George Gallup, collected data from only 50,000 people. The Literary Digest’s prediction was wildly wrong. Gallup’s was broadly correct. The Literary Digest went bust, while Gallup became famous and his polling organisation is still active and well-known today.